Table of contents

Open Table of contents

Introduction

In my previous post, I shared how we built a production-ready risk assessment system using Claude Code, taking it from a lovable.ai prototype to deployed infrastructure. What started as an experiment became a fundamental shift in how I approach software development.

After months of intensive use, pushing Claude Code to its limits across frontend, backend, infrastructure, and data pipelines, I’ve discovered something crucial: the more powerful these tools become, the more important our uniquely human capabilities become. AI excels at implementation, but architecture, judgment calls, and strategic thinking remain fundamentally human.

This guide distills practical strategies from building production systems with AI coding assistants. Whether you’re an AI coding skeptic, a casual user looking to level up, or someone already deep in the trenches, these battle-tested approaches will help you work more effectively with these tools.

Claude Code - AI coding assistant that lives in your terminal

Claude Code - AI coding assistant that lives in your terminal

Fair warning: this is a living document. As AI coding tools evolve at breakneck speed, so must our workflows. What works today might be obsolete next month. But the principles remain constant even as capabilities expand: clear communication, strategic thinking, quality control, and human judgment.

Let’s dive into what works when coding with AI is your daily reality.

Strategies for AI-Assisted Development

Moving from code writer to building systems using AI requires new approaches to planning, quality control, and workflow management.

Mindset Shifts

Architecture-first thinking

In my experience working with Claude Code, I saw a shift in my responsibilities toward architecting systems while the AI handled implementation mechanics. In this new paradigm, value lies in understanding how different components interact, where bottlenecks will emerge, and which patterns will scale. The coding agent excels at turning these decisions into working code, but it needs you to provide the architectural vision.

Focus shifts to system orchestration rather than syntax. Questions like “Should this be a microservice or a monolith?” or “How will this handle 10x traffic?” become your primary concerns. The AI can implement either approach competently once you’ve made the decision based on business constraints and future requirements.

This changes how you approach problems. Instead of starting with “How do I implement this?”, start with “What are we really trying to solve?” Define the boundaries, identify the integration points, and establish the data flow. Design the data models, API contracts, and system interactions first.

From writing code to writing specifications

You’ve heard it before: think before you code. But with AI coding assistants, this advice has transformed from a nice-to-have to a critical skill. Before, jumping straight into code meant maybe some refactoring later. Now, vague instructions to your coding agent can send it building entire systems in the wrong direction, burning through tokens and creating architectural debt that compounds quickly.

Instead of jumping into implementation details, you need a clear picture of system design and goals before any code gets written. Writing specifications forces this clarity and creates a shared language between you and your coding agent, and also between your human team members and their own coding agents.

Treat specifications as first-class deliverables. Save them in your codebase, create different specs for different components, and put them under version control. When your teammates are also working with coding agents, these specifications become the alignment layer that keeps everyone building toward the same architectural vision.

In Practice: : Have a docs/specs folder and treat it as importantly as src/.

Let’s say you’re designing a user authentication system and need help setting out the specifications. Using your coding agent, instead of prompting: “Build user authentication”, start with a specification design session:

Help me design a specification for user authentication. I need to support:

- Email/password login

- OAuth with Google and GitHub

- Role-based permissions (admin, user, guest)

- Session management

- Password reset flow

Walk me through the key architectural decisions we need to make, including database schema, security considerations, and API design. Let's discuss tradeoffs for each approach.Your coding agent will help you think through:

- Database schema design (separate user profiles vs embedded roles)

- Token strategy (JWT vs session-based)

- Security patterns (password hashing, rate limiting)

- API contract design

- Error handling approaches

Once you’ve worked through these decisions together, save the resulting specification as auth-system-spec.md. Now when you’re ready to implement, your coding agent has the full context and architectural constraints to build exactly what you need.

AI Pair Programmer

Think of coding agents as incredibly talented junior developers with encyclopedic knowledge but limited business context. They need you to understand why a feature matters, what edge cases users will hit, and which technical debt is acceptable given your timeline. These judgment calls remain fundamentally human.

The productivity gains are real when your coding agents focus on the right tasks. Boilerplate code, test generation, refactoring for consistency, DevOps scripts, documentation updates, centering that div: these time sinks can largely disappear from your workflow. But you need to direct this productivity toward valuable outcomes.

Effective collaboration requires constant dialogue. Review the AI’s suggestions, question its assumptions, and iterate on the approach. When it proposes a complex abstraction for a simple problem, push back. When it takes shortcuts that will haunt you later, catch them early. Don’t blindly accept everything your coding agent suggests. Help it help you.

With implementation details automated, engineers can focus on what matters: understanding user needs, collaborating with stakeholders, mentoring teammates, and thinking strategically about technical direction. The boring parts get automated, but the human parts become more important than ever.

Continuous Learning

Every interaction with your coding assistant is a learning opportunity. When it suggests an unfamiliar pattern or library, take time to understand why. When it refactors your code, study what changed. These tools expose you to approaches and best practices you might not encounter otherwise.

Use AI to accelerate learning in unfamiliar domains. Working on a React frontend when you’re a backend developer? The AI can guide you through modern patterns while you contribute the business logic. Building infrastructure when you’re primarily an application developer? Let the AI handle Terraform syntax while you learn the architectural principles.

Be actively engaged with the process. Don’t just accept working code; understand it. Ask it to explain its choices, compare different approaches, and walk you through the tradeoffs.

When I needed to implement WebSocket connections for real-time updates, I asked Claude Code not just to implement it, but to explain the different approaches (polling vs WebSocket vs Server-Sent Events), their tradeoffs, and why it recommended a particular solution for our use case. I walked away with working code and deeper understanding.

Quality Control Fundamentals

Review everything

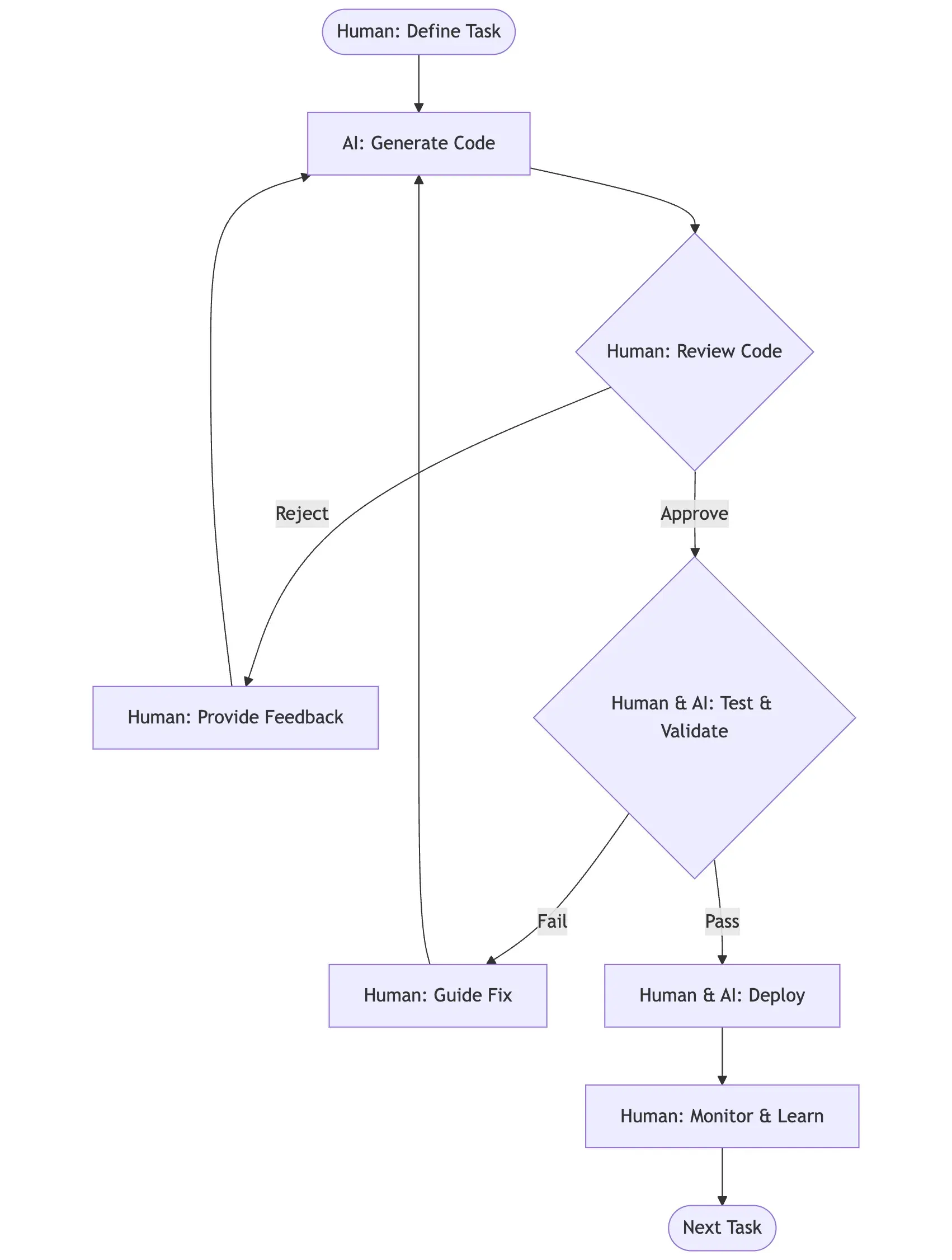

Human & AI Review Workflow - Systematic quality control with human oversight

Human & AI Review Workflow - Systematic quality control with human oversight

I may sound like a broken record, but the biggest takeaway from this post is this: Be actively engaged. AI-generated code requires active, engaged review. Every line should make sense to you. When it doesn’t, stop and investigate. The temptation to rubber-stamp working code is strong, especially when deadlines loom, but this leads to codebases you don’t understand and can’t maintain, and that blow up at the most inopportune moments.

Trust your gut!

Experienced developers develop an instinct for code smell. When something feels off, even if it works, investigate. AI coding assistants can produce syntactically correct code that violates best practices, creates maintenance nightmares, or solves the wrong problem entirely. Your intuition, built from years of debugging and maintaining systems, remains invaluable.

Catch shortcuts early

AI assistants optimize for making tests pass and errors disappear. Without clear direction, they’ll take the path of least resistance. Common shortcuts to watch for:

- TypeScript

anytypes appearing when proper typing gets complex - Tests getting commented out or skipped when they’re hard to fix

- Quick fixes that address symptoms rather than root causes

Example: “Let’s use any type for now and fix it later” should trigger immediate review. That “later” rarely comes, and type safety erosion spreads quickly through a codebase.

Technical debt awareness

Grady Booch’s tweet about where AI-generated codebases are heading.

Grady Booch’s tweet about where AI-generated codebases are heading.

AI can generate code faster than you can review it, making technical debt accumulation a real risk. Set up systems to track what gets generated and schedule regular cleanup sessions. Key areas to monitor from my experience:

- Duplicate type definitions or interfaces across files

- Stale files from abandoned approaches or refactoring attempts

- Over-engineered abstractions for simple problems

- Inconsistent patterns when the AI uses different solutions for similar problems, when your team members use different coding agents, or when the coding agent lacks awareness of system specifications

- Dependencies added but never fully utilized

Regular cleanup sessions prevent these issues from compounding. I schedule weekly reviews specifically for AI-generated code, looking for patterns to consolidate and abstractions to simplify (also using AI, of course).

Collaboration Strategies with Coding Agents

Be exact and specific

Lazy prompting leads to misaligned solutions and wasted tokens. While AI coding assistants include sophisticated prompt expansion and agentic workflows behind the scenes, your specificity remains the biggest determinant of output quality. The clearer your instructions, the less interpretation the AI needs to do.

In Practice: Instead of “implement user authentication,” I now write: “Build JWT-based auth with these requirements: Access tokens expire in 15 minutes, refresh tokens in 7 days. Store refresh tokens in Redis with user ID as key. Middleware should validate tokens on all /api routes except /api/auth/*. Return 401 with clear error messages for expired vs invalid tokens. Use Supabase for user management but handle our own JWT generation.”

Reference exact file paths, function names, and class definitions. Instead of “update the user service,” specify “modify the UserService class in src/services/user.service.ts, specifically the updateProfile method.” This precision saves tokens on searching and reduces the chance of modifications to the wrong code.

Include constraints and non-functional requirements upfront. Mention performance considerations, security requirements, and coding standards in your initial prompt or through system-wide documentation (more on this in a moment), rather than fixing them in subsequent iterations.

Request explanations

As mentioned in the pair programmer section, make “explain your approach” part of your standard workflow. Before approving any non-trivial change, ask the AI to walk through its reasoning. This serves two purposes: you catch flawed logic early, and you deepen your own understanding of the solution.

Questions to ask regularly: “Why did you choose this pattern over alternatives?” “What are the tradeoffs of this approach?” “How does this handle edge cases?” “What assumptions are you making about the system?”

Multi-LLM validation

Different AI models have different strengths and blind spots. When facing complex bugs or architectural decisions, get second opinions.

Create a workflow for critical decisions: propose solution with AI #1, validate approach with AI #2, implement with your preferred coding assistant. This cross-validation catches more issues than any single tool would. Example: use Claude to create a plan, then review it using GPT-4o.

Sometimes it’s worth thinking outside the box. Literally. When you’re facing foundational questions or mulling over a fundamental decision, your current codebase may pollute your coding agent’s thinking. In these cases, use regular Claude or ChatGPT to think things through.

This strategy particularly shines for debugging. When one AI gets stuck in a solution path, another might immediately spot the issue. I’ve solved numerous “impossible” bugs by simply explaining the problem to a different AI model without burdening it with the context.

Workflow Optimization

Plan-first approach

Beyond systems design, it’s worth asking your coding agent to create a detailed plan for any changes that touch multiple parts of your codebase. A good example is any major refactoring task. This forces both you and the agent to think through the approach before committing to code. A good plan includes the sequence of changes, files that will be modified, new files to create, and potential risks or dependencies.

Review this plan critically. Look for over-engineering, missed requirements, or approaches that don’t align with your existing architecture. Modify the plan until it matches your vision, then save it as a markdown file in your project. Make sure to include clear tasks or todos in your markdown document.

Track progress against this plan systematically. Ask the coding agent to update the checklist of tasks as it completes each phase. This creates natural checkpoints for review and prevents the AI from wandering off course. This is in addition to the automated checklists tools like Claude Code use when executing your prompt.

A systematic, plan-first approach to AI-assisted development

A systematic, plan-first approach to AI-assisted development

Example: For a recent refactoring to use shared types, I had Claude Code create a plan with specific tasks: creating a new module for shared types, scripts for automated type generation, and frontend and backend codebase changes. I saved this as shared-types-refactoring.md and updated it after each major milestone. When I hit issues (and I did frequently), I could trace back to see where we deviated from the original design.

Context management

Long conversation threads degrade AI performance. As context grows, the AI loses track of earlier decisions, starts referencing outdated code, and makes inconsistent choices. Start fresh conversations for distinct features or major refactoring efforts.

Create clear boundaries between work sessions. When switching from backend API work to frontend implementation, start a new conversation. Include a brief context setter: “We just finished implementing the new module for shared types (see shared-types-refactoring.md). Now let’s update the frontend to use the auto-generated TypeScript interfaces.”

Documentation discipline

Treat AI instructions as living documentation. Create a claude.md or .ai-instructions file in your repository root. Include coding standards, architectural patterns, common pitfalls, and project-specific conventions. Update this file as you discover new patterns that work well.

Document not just what patterns to use, but why. When you establish a convention like “always use dependency injection for services,” explain the reasoning. This helps both AI and human developers understand the intent behind the rules.

Include anti-patterns explicitly. If you’ve discovered the AI tends toward certain problematic solutions, document what to avoid. “DO NOT create separate interface files for every class” or “AVOID nested ternary operators” can save hours of cleanup.

Practical Claude Code Tips

Anthropic’s engineering team has published an excellent guide on Claude Code best practices that covers the technical foundations thoroughly. Their key recommendations include:

- Create

CLAUDE.mdfiles to document project-specific context, coding standards, and common commands that automatically load into every conversation - Customize tool permissions to skip repetitive approvals while maintaining security boundaries

- Use MCP (Model Context Protocol) to extend Claude’s capabilities with external tools and services

- Develop iterative workflows: explore → plan → code → commit, with explicit verification steps between phases.

Be sure to read their guide on how to get the best out of Claude Code. In addition to these tips, let’s explore some additional patterns that emerge when using Claude Code with real teams and evolving codebases.

Strategic Use of Documentation

You can use CLAUDE.md file as your coding agent’s development guide that evolves with your codebase.

When you initialise your claude code project, Claude.md will contain an overview of your project and some basic instructions. Beyond the auto-generated stuff, structure your documentation to encode architectural decisions, design patterns, and tribal knowledge. When one team member discovers an effective pattern or solution, documenting it immediately makes that knowledge available to everyone’s AI assistant.

If you’re using multiple documents for specifications, task progress etc, make sure to include the paths to these documents in Claude.md so that claude code can find them when needed.

In Practice: Our CLAUDE.md evolved into a navigation hub for our AI assistant. Instead of cramming everything into one file, we created a documentation architecture:

Below is an example of what this looks like in the CLAUDE.md file.

## Project Documentation Map

- System Architecture: `/docs/architecture/system-design.md`

- API Specifications: `/docs/specs/api-v2-spec.md`

- Frontend Component Guide: `/docs/specs/component-patterns.md`

- Current Sprint Plan: `/docs/plans/sprint-15-plan.md`

- Migration Progress: `/docs/plans/database-migration-status.md`

## Active Work Contexts

When working on authentication: See `/docs/plans/auth-redesign-plan.md`

When refactoring agents: See `/docs/plans/agent-refactor-plan.md`

## Team Conventions

- Always update the relevant plan in `/docs/plans/` files when completing major tasksTeam-Wide Slash Commands

Custom slash commands become powerful when shared across your team. They encode workflows, standardize processes, and ensure consistency regardless of who’s coding. The key is identifying repetitive team patterns and turning them into executable commands.

Create commands that bridge the gap between AI capabilities and your team’s specific needs. Store them in .claude/commands/ and commit them to version control. Now everyone’s claude code will have the same workflows.

In Practice: Our most valuable shared commands:

/deploy-checklist: Runs through deployment readiness check, from environment variables to monitoring setup/refactor-to-pattern: Takes messy code and refactors it to match our established patterns, maintaining functionality while improving consistency/commit: Commit code using our internal commit message guidelines./pr: Raise a PR from the feature to the develop branch, using consistent PR naming, change documentation, and messaging.

These commands transform tribal knowledge into executable workflows. Junior engineers get senior-level guidance embedded in their tools. Senior engineers ensure their standards are consistently applied without manual review.

Multi-Agent Architectures

Claude Code recently introduced built-in support for custom agents through the /agents command, making specialized AI configurations even more powerful. Different phases of development benefit from different AI configurations, and now you can create these formally within Claude Code.

The key insight: each agent maintains its own context window, completing tasks and returning only essential information to the main agent. This prevents context pollution that degrades AI performance. Your main agent stays focused on orchestration while subagents handle specific tasks without cluttering the primary conversation.

Effective multi-agent setups typically include:

Planning Agent

- Purpose: System design, architecture decisions, task breakdown (can be further divided into individual agents)

- Configuration: Access to documentation, web search, no write permissions

- Returns: Structured plan and key architectural decisions only

Implementation Agent

- Purpose: Writing code, following specifications

- Configuration: Full codebase access, all tools enabled

- Returns: Summary of changes made and any blockers encountered

Review Agent

- Purpose: Code quality, security audits, best practices enforcement

- Configuration: Read-only access, quality guidelines, anti-patterns documentation

- Returns: List of issues found and specific recommendations

Research Agent

- Purpose: Exploring new libraries, reading documentation, answering technical questions

- Configuration: Web access, minimal project context to avoid bias

- Returns: Concise findings and recommended approaches

Each agent has its own configuration file (similar to CLAUDE.md), allowing fine-tuned behavior for specific tasks.

In Practice: When implementing authentication, the research agent explores OAuth providers and security best practices, the planning agent designs the system architecture, the implementation agent writes the code, and the review agent validates security. Each uses thousands of tokens internally, but your main agent only sees the distilled results. This context isolation is what makes complex projects manageable for your main agent.

Conclusion

The pace of improvement in AI coding tools makes long-term predictions futile. What seems like an AI limitation today might be solved next month. Instead of predicting specific capabilities, I focus on adaptable principles and sharpening skills that will remain valuable regardless of how powerful these tools become.

Things change. Things remain the same.

Software engineering is evolving, but some things remain constant. The most critical work happens before any code gets written: translating vague business requirements into clear technical specifications, making architectural decisions that will scale, and breaking down complex problems into manageable components. This will continue to be a major part of a software engineer’s job.

You remain the human in the room, representing your team and clarifying requirements from stakeholders who often don’t know what they need. This translation layer between human needs and technical implementation becomes more critical as AI handles more of the coding. For complex projects, this role is irreplaceable. Someone needs to ask the right questions, push back on conflicting requirements and unrealistic deadlines, and make judgment calls about technical tradeoffs.

When our data science team requested an “AI-based data extraction pipeline,” my role was diving deeper through technical discussions. What types of models would consume this data? Would they incorporate AI reasoning into their analyses? Would they need structured data for traditional ML or unstructured data for LLMs? Without these conversations, it’s easy to vibe-code a generic system that looks impressive but doesn’t meet actual stakeholder needs. We discovered they needed three distinct pipelines: one for structured financial data feeding into risk models, another for unstructured documents going to LLM agents, and a third for real-time market data. Each had different latency, accuracy, and format requirements.

Similarly, when the product team wanted a “real-time dashboard with status updates for long-running jobs,” my first instinct (and what Claude Code suggested) was implementing WebSocket connections to our backend. But understanding our tech stack mattered more than implementing the obvious solution. Since we were already using Supabase, I leveraged its built-in real-time functionality instead of building a custom WebSocket layer. This saved hours of development and avoided maintaining additional infrastructure. The AI knew how to build real-time systems perfectly, but I knew which solution fit our existing architecture.

New core competencies

Writing code becomes writing specifications. Clear, unambiguous specs that capture both requirements and constraints. Strategic architecture decisions matter more than implementation details. Choosing microservices versus monolith impacts your team for years, while specific code syntax can be refactored in minutes.

Task breakdown and progress tracking become essential skills. AI can handle well-defined tasks brilliantly but struggles with nebulous objectives. Your ability to decompose “build a payment system” into discrete, verifiable tasks determines your project’s success.

Managing AI-generated technical debt requires new vigilance. Traditional debt accumulates slowly and developers feel its pain directly. AI debt accumulates rapidly and silently: duplicated patterns, over-engineered solutions, inconsistent approaches across files. Regular audits and refactoring sessions become mandatory.

Humans needed

AI coding tools represent a fundamental shift in software engineering, not from human to machine, but from implementation to orchestration. Your expertise matters more, not less, in this new landscape.

For coding, the specialist gap persists where training data is scarce. Embedded systems, cutting-edge frameworks, and less popular languages still require deep human expertise. If you’re working with hardware interfaces, implementing novel algorithms, or using niche tools, AI assistance drops dramatically. These specialists become even more valuable as generalist coding becomes commoditized.

Yet paradoxically, these same tools empower generalists to tackle problems previously outside their domain. A backend engineer can now build polished frontends. A frontend developer can set up infrastructure. A data scientist can create production APIs. The key is recognizing AI’s limitations: it excels at common patterns but struggles with edge cases, performance optimization, and domain-specific best practices. Generalists who understand these boundaries can leverage AI to expand their capabilities while knowing when to consult specialists or dive deeper themselves.

Business context and judgment calls remain fundamentally human. Understanding why a feature matters, which technical debt is acceptable given your startup’s runway, when to optimize for performance versus development speed: these decisions require understanding the full context of your business, team, and market. AI can implement any approach brilliantly once you’ve made the decision, but it can’t make that decision for you.

To sum up

The balance is clear: massive productivity gains are possible, but only with vigilant quality maintenance. Experiment boldly with these tools, but review critically. Let AI handle the implementation details while you focus on architecture, stakeholder communication, and strategic decisions.

Start with one principle from this guide. Perhaps writing clearer specifications or setting up review workflows. Measure the impact. Build from there. The teams that thrive will be those who view AI as a powerful partner, not a replacement or competitor.

Embrace these tools for their potential to eliminate the mundane and repetitive, opening up time for strategic thinking and focusing on the human side of solving engineering problems. That’s where our real value has always been.